In response to the increasing demands that artificial intelligence places on computer networks, Princeton University researchers have, in recent years, dramatically increased the speed and reduced the energy consumption of specialized AI systems. The researchers have now co-designed hardware, software, and other tools that allow designers to incorporate these new systems into their applications.

“Software is a crucial part of enabling hardware,” said Naveen Verma, a Princeton professor and the leader of the research team. “Designers can continue to use the same software system, but it will work ten times faster or more efficiently.”

By reducing power consumption, systems made with the Princeton technology can bring applications such as advanced language translators and piloting software for drones to the edge of computing infrastructure.

Verma is also the director of the University’s Keller Center for Innovation in Engineering Education. Moving computation to the edge, he said, requires both efficiency and performance.

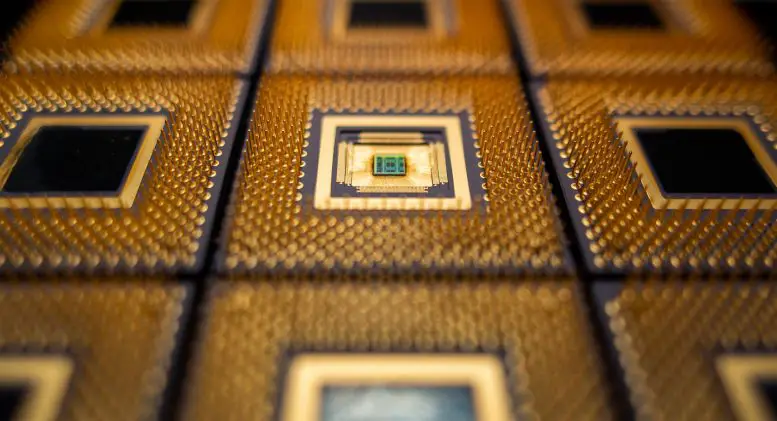

Two years ago, the Princeton research team created a new chip designed to increase the performance of neural networks, which underpin today’s artificial intelligence. The chip performed hundreds to thousands of times better than other advanced microchips and was revolutionary in many ways. But it differed so much from the hardware typically used for neural nets that it presented a challenge to developers.

Verma said in 2018 that the chip’s significant drawback is that it uses an unusual and disruptive architecture, one that must be reconciled with the vast infrastructure and design methodologies in use today.

The researchers continued to improve the chip over the next two years. They created a software system that allows artificial intelligence systems to benefit from the new chip’s speed and efficiency. Hongyang Jia, a student in Verma’s research lab, presented the latest software at the International Solid-State Circuits Virtual Conference in February 2021. He explained how it would enable the new chips to work with different types of networks and make the systems scalable in both hardware and software execution.

Verma said the system is programmable across all of these networks. “The networks can be extensive, or they can be very tiny.”

Verma’s team developed the new chip in response to the increasing demand for artificial intelligence and the growing burden AI places on computer networks. Artificial intelligence, which allows machines to imitate cognitive functions like learning and judgment, plays a vital role in new technologies such as image recognition, translation, and self-driving cars. Ideally, the computing for a technology like drone navigation would take place on the drone itself, not on remote computers. However, digital microchips can be very demanding in power consumption and memory storage, which makes designing such a system challenging. The usual solution is to place most of the computation and memory on remote servers that communicate wirelessly with the drone. This increases the demands on the communication system and introduces security issues and delays in sending instructions to the drone.

Princeton researchers approached the problem in several ways. The first was to create a chip that stores data and conducts computations in the same place. This technique, called in-memory computing, reduces the time and energy required to exchange information with dedicated memory. Although the technique increases efficiency, it also introduces problems. In-memory computing requires analog operation, which is vulnerable to corruption by temperature spikes and voltage fluctuations. To solve this problem, the Princeton team used capacitors instead of transistors. Capacitors, which store an electric charge, are more precise and less affected by voltage shifts. Capacitors can also be placed on top of memory cells to increase processing density and reduce energy consumption.
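The idea of computing where the data is stored, and the effect of analog error on the result, can be illustrated with a small simulation. This is a minimal sketch, not the Princeton chip's design: the function name `in_memory_matvec`, the Gaussian noise model, and the `noise_std` parameter are all assumptions chosen for illustration.

```python
import random

def in_memory_matvec(weights, inputs, noise_std=0.0, seed=0):
    """Multiply-accumulate modeled as happening where the weights are stored.

    Each row of `weights` stands for a group of memory cells whose
    contributions are summed together. `noise_std` is a hypothetical
    stand-in for analog error (for example, from temperature or voltage
    variation) added to each summed result.
    """
    rng = random.Random(seed)
    outputs = []
    for row in weights:
        exact = sum(w * x for w, x in zip(row, inputs))
        outputs.append(exact + rng.gauss(0.0, noise_std))
    return outputs

weights = [[1, -1, 1], [0, 1, 1]]
inputs = [1, 1, 0]

ideal = in_memory_matvec(weights, inputs)                  # no analog error
noisy = in_memory_matvec(weights, inputs, noise_std=0.05)  # small analog error
print("ideal:", ideal)
print("noisy:", noisy)
```

The smaller the simulated error, the closer the noisy outputs stay to the ideal ones, which is the property a more precise circuit element is meant to provide.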

But even after making analog operations robust, many challenges remained. The analog core had to be integrated into a digital architecture so that it could be used with other functions and software to make practical systems work. Digital systems use on-and-off switches to represent ones and zeros, which computer engineers use to create the algorithms that make up computer programming. Analog computers take a different approach. In an article published in IEEE Spectrum, Yannis Tsividis, a Columbia University professor, described an analog computer as a physical system governed by equations similar to the ones the programmer wants to solve. The abacus is one example. Tsividis also notes that a bucket and a hose could serve as an analog computer for solving certain calculus problems. To compute, one does the math on the abacus or measures the water in the bucket.

Through the Second World War, analog computing was the dominant technology, used for everything from directing naval guns to predicting tides. However, analog systems were difficult to construct and required skilled operators. After the invention of the transistor, digital systems proved more adaptable and efficient. Since then, new technologies and improved circuit designs have allowed engineers to overcome many of the shortcomings of analog systems, which are especially beneficial for applications like neural networks. The question now is how to combine the best of both worlds.

Verma notes that the two types of systems can be used in complementary ways. While digital systems play the major role, neural networks running on analog chips can perform specialized operations quickly and efficiently. It is therefore crucial to develop a software system that integrates the two technologies seamlessly.

He said the idea is to convert only some of the network to in-memory computing. “You must integrate the ability to do all the other stuff and do it in a programmable manner.”
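One way to picture this split is a planner that sends only some layers of a network to the in-memory core and leaves the rest on digital logic. The sketch below is purely illustrative: the article does not say which operations the Princeton software maps to which hardware, so the layer kinds, the `IN_MEMORY_KINDS` set, and the `partition` function are all hypothetical.

```python
# Assumption: only layers dominated by multiply-accumulate work are sent
# to the in-memory core; everything else stays on digital logic.
IN_MEMORY_KINDS = {"dense", "conv"}

def partition(layers):
    """Return (layer_name, target) pairs, where target is 'in_memory' or 'digital'."""
    plan = []
    for name, kind in layers:
        target = "in_memory" if kind in IN_MEMORY_KINDS else "digital"
        plan.append((name, target))
    return plan

# A made-up four-layer network used only to exercise the planner.
network = [
    ("conv1", "conv"),
    ("relu1", "activation"),
    ("pool1", "pooling"),
    ("fc1", "dense"),
]

plan = partition(network)
for name, target in plan:
    print(f"{name}: {target}")
```

Because the mapping is just data, the same planner handles a tiny network or a very large one, which is the sense of "programmable" used here.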

Other members of the research team include Hossein Valavi, a Princeton postdoctoral researcher, and Jinseok Lee and Murat Ozatay, graduate students at Princeton. The Princeton University School of Engineering and Applied Science supported the project through the kind generosity of William Addy ’82.